This research project is structured as a multi-layer alpha discovery and evaluation pipeline.

The goal is to develop predictive trading signals (“alphas”), train models that adapt to market conditions, and validate whether such signals can translate into robust portfolio performance.

Traditional quant strategies rely on hand-crafted indicators or simple factor models. However, modern markets are increasingly noisy and regime-dependent, which reduces the persistence of static signals.

To address this, we combine both classical alpha formulas (from academic and practitioner literature) and data-driven machine learning methods into a layered research framework that emphasizes interpretability, adaptability, and realistic backtesting.

2. Data Description & Objective

The dataset spans S&P 500 equities (2015–2025) and integrates multiple data streams:

OHLCV data (Open, High, Low, Close, Volume) – baseline inputs for technical signals.

Derived technical indicators such as RSI, MACD, Bollinger Bands, volatility measures.

Fundamental ratios including earnings, book value, debt-to-equity, dividend yield, and growth rates.

Macroeconomic and risk sentiment variables such as VIX and CPI.

The data was preprocessed with hierarchical imputation, time-aware forward-filling, and normalization techniques to ensure completeness across firms and horizons.

The research objectives for this dataset are threefold:

Construct predictive forward returns at multiple horizons.

Generate alpha signals from both academic literature and proprietary feature engineering.

Evaluate whether these signals provide statistically and economically significant predictive power in equity markets.

2.1 Market Data Acquisition Report – S&P 500 OHLCV

From January 1, 2015 to today, we compiled a complete historical OHLCV dataset for the S&P 500. The process began with retrieving the official ticker list (503 symbols, including adjustments for tickers with dots to API-friendly format).

Using the EOD Historical Data API, we sequentially pulled and stored each symbol’s full trading history. A short delay between requests ensured smooth operation within API limits. Data was saved in parquet format for efficiency, with each file containing date-sorted daily records alongside the ticker identifier.

By the end, the dataset was successfully assembled—503 tickers, spanning over a decade of trading data—ready for downstream analysis, model training, and alpha research.

Code

import pandas as pdimport requestsfrom pathlib import Pathimport timefrom datetime import datetime# ========== CONFIG ==========EOD_API_KEY ="684483f54bca11.06671973"# <-- Replace with your actual EODHD keySTART_DATE ="2015-01-01"END_DATE = datetime.today().strftime("%Y-%m-%d")RATE_LIMIT_SECONDS =1.1# safe delay between calls# ========== FOLDER SETUP ==========data_root = Path("data")ohlcv_dir = data_root /"ohlcv"ohlcv_dir.mkdir(parents=True, exist_ok=True)# ========== STEP 1: Get S&P 500 Tickers ==========print("📥 Downloading S&P 500 ticker list...")sp500_df = pd.read_html("https://en.wikipedia.org/wiki/List_of_S%26P_500_companies")[0]tickers = [f"{sym.replace('.', '-')}.US"for sym in sp500_df['Symbol']]print(f"✅ Loaded {len(tickers)} tickers.")# ========== STEP 2: Define OHLCV Download Function ==========def download_eod_ohlcv(ticker, start=START_DATE, end=END_DATE): url =f"https://eodhistoricaldata.com/api/eod/{ticker}" params = {"api_token": "684483f54bca11.06671973","from": start,"to": end,"fmt": "json" }try: response = requests.get(url, params=params) response.raise_for_status() data = response.json() df = pd.DataFrame(data) df['date'] = pd.to_datetime(df['date']) df = df.sort_values('date') df['ticker'] = ticker.replace('.US', '')return dfexceptExceptionas e:print(f"[ERROR] Failed to fetch {ticker}: {e}")returnNone# ========== STEP 3: Loop Over Tickers and Save ==========print("📡 Starting EODHD OHLCV downloads...")for i, ticker inenumerate(tickers):print(f"[{i+1}/{len(tickers)}] Downloading {ticker}...") df = download_eod_ohlcv(ticker)if df isnotNoneandnot df.empty: filepath = ohlcv_dir /f"{ticker.replace('.', '_')}.parquet" df.to_parquet(filepath, index=False) time.sleep(RATE_LIMIT_SECONDS) # prevent hitting EOD rate limitsprint("✅ All tickers processed and saved.")

2.2 Data Retrieval Summary – SPY (S&P 500 ETF)

We assembled a complete daily OHLCV history for the SPDR S&P 500 ETF (SPY) spanning January 3, 2000 through today. The dataset, sourced from EOD Historical Data, contains over two decades of market activity precisely 6 columns: open, high, low, close, adjusted close, and volume.

The earliest record shows SPY opening at 148.25 on January 3, 2000, closing at 145.44 with 8.16 million shares traded. From there, the data traces the ETF’s journey through market cycles, from the dot-com crash to the global financial crisis, the pandemic shock, and the subsequent bull runs.

The full dataset is stored in efficient parquet format, sorted chronologically, and ready for time-series analysis, trend studies, and model development.

The full historical record for all S&P 500 constituents has been successfully aggregated into a single, unified dataset. This compilation merges daily OHLCV and adjusted close data for every ticker, standardized in structure and cleaned for completeness.

Code

import pandas as pdfrom pathlib import Path# Set input/output pathsohlcv_dir = Path("data/ohlcv")output_path = Path("data/sp500_ohlcv_combined.parquet")# Helper function to load & standardize one filedef load_and_clean_parquet(file): df = pd.read_parquet(file)# Standardize column names to lowercase df.columns = [col.lower() for col in df.columns]# Ensure 'adjusted_close' existsif'adjusted_close'notin df.columns and'close'in df.columns: df['adjusted_close'] = df['close']# Filter to expected columns expected_cols = ['date', 'open', 'high', 'low', 'close', 'adjusted_close', 'volume', 'ticker'] df = df[[col for col in expected_cols if col in df.columns]]# Ensure date is datetime format df['date'] = pd.to_datetime(df['date'], errors='coerce')# Drop any rows missing key fields df = df.dropna(subset=['date', 'adjusted_close', 'ticker'])return df# Load and combine all tickerscombined_df = pd.concat([ load_and_clean_parquet(file)forfilein ohlcv_dir.glob("*.parquet")], ignore_index=True)# Sort for long-format: date first, then tickercombined_df = combined_df.sort_values(['date', 'ticker']).reset_index(drop=True)# Save to final combined Parquet filecombined_df.to_parquet(output_path, index=False)print(f"✅ Combined data saved to {output_path}")

2.4 Finalized S&P 500 Historical Dataset

The S&P 500 historical database has been refined to ensure analytical integrity. Tickers with insufficient history (<500 trading days) were excluded, and gaps within each series were forward-filled to maintain continuity without introducing artificial backward data.

The result is a clean, liquid-universe dataset—chronologically ordered and free from sparsity—stored in parquet format for fast, large-scale quantitative research and backtesting.

Code

# Reload raw filedf = pd.read_parquet("data/sp500_ohlcv_combined.parquet")# Drop sparse tickers (fewer than 500 observations)min_days =500valid_tickers = df['ticker'].value_counts()[lambda x: x > min_days].indexdf = df[df['ticker'].isin(valid_tickers)]# Forward-fill within each ticker (no backfill)df = ( df.sort_values(['ticker', 'date']) .groupby('ticker', group_keys=False) .apply(lambda g: g.ffill()) .reset_index(drop=True))# Save final cleaned versionfinal_path ="data/sp500_ohlcv_combined_clean.parquet"df.to_parquet(final_path, index=False)print(f"✅ Cleaned and filtered data saved to {final_path}")

2.5 Sector-Enriched S&P 500 Dataset

The cleaned S&P 500 OHLCV data has been augmented with GICS sector and industry classifications sourced from Wikipedia. This final enriched dataset now enables sector-level and industry-level analysis, supporting more targeted factor research and cross-sectional studies.

Code

import pandas as pdfrom pathlib import Path# ========== CONFIG ==========sector_map_output = Path("data/sp500_sector_map.parquet")clean_ohlcv_path = Path("data/sp500_ohlcv_combined_clean.parquet")final_enriched_path = Path("data/sp500_ohlcv_enriched.parquet")# ========== STEP 1: Extract sector map from Wikipedia ==========print("🌐 Scraping sector/industry info from Wikipedia...")wiki_url ="https://en.wikipedia.org/wiki/List_of_S%26P_500_companies"sp500_table = pd.read_html(wiki_url, header=0)[0]# Create sector mapsector_map = sp500_table[['Symbol', 'Security', 'GICS Sector', 'GICS Sub-Industry']]sector_map.columns = ['ticker', 'company', 'sector', 'industry']sector_map['ticker'] = sector_map['ticker'].str.replace('.', '-', regex=False)sector_map = sector_map.drop_duplicates('ticker')# Save sector map to filesector_map.to_parquet(sector_map_output, index=False)print(f"✅ Sector map saved to {sector_map_output}")# ========== STEP 2: Load Cleaned OHLCV and Merge ==========print("📦 Loading cleaned OHLCV data...")df = pd.read_parquet(clean_ohlcv_path)print("🔗 Merging with sector info...")df = df.merge(sector_map[['ticker', 'sector', 'industry']], on='ticker', how='left')# ========== STEP 3: Save Final Output ==========df.to_parquet(final_enriched_path, index=False)print(f"✅ Final enriched data saved to {final_enriched_path}")

2.6 S&P 500 Options Chain Collection

Options chains were successfully retrieved for the majority of S&P 500 tickers, with each contract categorized by type (CALL/PUT) and tagged with expiration dates.

These parquet files, stored by ticker, provide a structured foundation for implied volatility modeling, skew analysis, and options-based market sentiment studies across the index.

Code

import osimport timeimport requestsimport pandas as pdfrom pathlib import Path# ========== CONFIG ==========EOD_API_KEY ="684483f54bca11.06671973"options_dir = Path("data/options")options_dir.mkdir(parents=True, exist_ok=True)RATE_LIMIT_SECONDS =1.1# ========== LOAD TICKERS ==========tickers_df = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")tickers = tickers_df['ticker'].drop_duplicates().tolist()# ========== FUNCTION TO GET OPTIONS ==========def get_options_chain(ticker): url =f"https://eodhistoricaldata.com/api/options/{ticker}.US" params = {"api_token": EOD_API_KEY,"fmt": "json" }try: r = requests.get(url, params=params) r.raise_for_status() data = r.json() all_calls = [] all_puts = []for expiry in data.get('data', []): # correct path to the options exp_date = expiry.get("expirationDate") options = expiry.get("options", {}) calls = pd.DataFrame(options.get("CALL", [])) puts = pd.DataFrame(options.get("PUT", []))ifnot calls.empty: calls['type'] ='CALL' calls['expirationDate'] = exp_date all_calls.append(calls)ifnot puts.empty: puts['type'] ='PUT' puts['expirationDate'] = exp_date all_puts.append(puts) all_opts = pd.concat(all_calls + all_puts, ignore_index=True) all_opts['ticker'] = tickerreturn all_opts ifnot all_opts.empty elseNoneexceptExceptionas e:print(f"[ERROR] {ticker}: {e}")returnNone# ========== LOOP TO DOWNLOAD ==========for i, ticker inenumerate(tickers):print(f"[{i+1}/{len(tickers)}] Downloading options for {ticker}...") df = get_options_chain(ticker)if df isnotNoneandnot df.empty: df.to_parquet(options_dir /f"{ticker}.parquet", index=False)print(f"✅ Saved {ticker}.parquet with {len(df)} rows.")else:print(f"⚠️ No data for {ticker}.") time.sleep(RATE_LIMIT_SECONDS)print("✅ All options chains downloaded.")

All individual S&P 500 options files were merged into a unified dataset.

Code

import pandas as pdfrom pathlib import Path# ========== PATH SETUP ==========options_dir = Path("data/options")combined_options_path = Path("data/sp500_options_combined.parquet")# ========== LOAD & COMBINE ==========def load_and_standardize_option(file): df = pd.read_parquet(file) df.columns = [c.lower() for c in df.columns]# Ensure required fields exist required = ['ticker', 'type', 'strike', 'expirationdate', 'impliedvolatility', 'openinterest', 'lasttradeprice']for col in required:if col notin df.columns: df[col] = pd.NA df['expirationdate'] = pd.to_datetime(df['expirationdate'], errors='coerce') df = df.dropna(subset=['expirationdate', 'ticker'])return df# Combine all per-ticker options chainscombined_df_options = pd.concat([ load_and_standardize_option(file)forfilein options_dir.glob("*.parquet")], ignore_index=True)# Save final combined options filecombined_df_options.to_parquet(combined_options_path, index=False)print(f"✅ Combined options data saved to {combined_options_path}")

2.7 Sector Tagging for Options Data

Cached sector map was loaded, options features merged with sector/industry info.

Code

import pandas as pdfrom pathlib import Path# ========== CONFIG ==========sector_map_path = Path("data/sp500_sector_map.parquet")options_features_path = Path("data/sp500_options_combined.parquet")final_enriched_path = Path("data/sp500_options_combined_enriched.parquet")# ========== STEP 1: Load or Create Sector Map ==========if sector_map_path.exists():print("📂 Loading cached sector map...") sector_map = pd.read_parquet(sector_map_path)else:print("🌐 Scraping sector/industry info from Wikipedia...") wiki_url ="https://en.wikipedia.org/wiki/List_of_S%26P_500_companies" sp500_table = pd.read_html(wiki_url, header=0)[0] sector_map = sp500_table[['Symbol', 'Security', 'GICS Sector', 'GICS Sub-Industry']] sector_map.columns = ['ticker', 'company', 'sector', 'industry'] sector_map['ticker'] = sector_map['ticker'].str.replace('.', '-', regex=False) sector_map = sector_map.drop_duplicates('ticker') sector_map.to_parquet(sector_map_path, index=False)print(f"✅ Sector map saved to {sector_map_path}")# ========== STEP 2: Load Options Features ==========print("📊 Loading options feature file...")df = pd.read_parquet(options_features_path)# ========== STEP 3: Merge Sector Info ==========print("🔗 Merging with sector info...")df = df.merge(sector_map[['ticker', 'sector', 'industry']], on='ticker', how='left')# ========== STEP 4: Save Final Output ==========df.to_parquet(final_enriched_path, index=False)print(f"✅ Final enriched options feature file saved to {final_enriched_path}")

Fundamentals

2.8 S&P 500 Fundamental Financial Data Collection and Computation (2015–2025)

We systematically collected fundamental financial data for S&P 500 companies from 2015 to 2025 using the EOD Historical Data API. For each ticker, yearly balance sheet, income statement, and cash flow metrics were extracted, including net income, EBITDA, revenue, operating income, dividends, assets, liabilities, equity, and shares outstanding.

Using adjusted year-end prices, key metrics such as EPS, market capitalization, P/E ratio, ROE, ROA, operating margin, dividend yield, and debt-to-equity were computed.

Year-over-year EPS growth was also calculated to track profitability trends. We also need to take into account stock splits of companies too.

For example, AAPL last split was on 2020-08-31 with a 4:1 split factor.

Code

import requestsimport jsonEOD_API_KEY ="684483f54bca11.06671973"ticker ="AAPL"url =f"https://eodhistoricaldata.com/api/fundamentals/{ticker}.US"params = {"api_token": EOD_API_KEY}response = requests.get(url, params=params)data = response.json()# Print the split factor and datesplit_info = data.get("SplitsDividends", {})split_factor = split_info.get("LastSplitFactor")split_date = split_info.get("LastSplitDate")print(f"📆 Last Split Date: {split_date}")print(f"🔁 Last Split Factor: {split_factor}")

AAPL shares outstanding (2015–2024) show a steady decline due to buybacks: 2015: 23,172,276,000

2016: 22,001,124,000

2017: 21,006,768,000

2018: 20,000,436,000

2019: 18,595,652,000

2020: 17,528,214,000

2021: 16,864,919,000

2022: 16,325,819,000

2023: 15,812,547,000

2024: 15,408,095,000

Code

import osimport timeimport requestsimport pandas as pdfrom pathlib import Pathfrom datetime import datetime# ========== CONFIG ==========EOD_API_KEY ="684483f54bca11.06671973"ohlcv_df = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")tickers = ohlcv_df["ticker"].drop_duplicates().tolist()fundamentals_dir = Path("data/fundamentals_manual_computed")fundamentals_dir.mkdir(parents=True, exist_ok=True)RATE_LIMIT_SECONDS =1.2def safe_float(val):try:if val in [None, '', '-', 'null', 'None']:returnNonereturnfloat(val)except:returnNone# ========== PRICE LOOKUP ==========ohlcv_df["year"] = ohlcv_df["date"].dt.yearprice_lookup = ( ohlcv_df.groupby(["ticker", "year"])["adjusted_close"] .last() .to_dict())# ========== FUNCTION ==========def get_fundamentals_by_year(ticker, price_lookup=None): url =f"https://eodhistoricaldata.com/api/fundamentals/{ticker}.US" params = {"api_token": EOD_API_KEY}try: response = requests.get(url, params=params) response.raise_for_status() data = response.json() bs_yearly = data.get("Financials", {}).get("Balance_Sheet", {}).get("yearly", {}) is_yearly = data.get("Financials", {}).get("Income_Statement", {}).get("yearly", {}) cs_yearly = data.get("Financials", {}).get("Cash_Flow", {}).get("yearly", {}) rows = []for year_str insorted(is_yearly.keys()): year =int(year_str[:4])ifnot (2015<= year <=2025):continue inc = is_yearly.get(year_str, {}) bs = bs_yearly.get(year_str, {}) cf = cs_yearly.get(year_str, {}) net_income = safe_float(inc.get("netIncome")) ebitda = safe_float(inc.get("ebitda")) revenue = safe_float(inc.get("totalRevenue")) op_income = safe_float(inc.get("operatingIncome")) shares_out = safe_float(bs.get("commonStockSharesOutstanding")) dividends = safe_float(cf.get("dividendsPaid")) gross_profit = safe_float(inc.get("grossProfit")) equity = safe_float(bs.get("totalStockholdersEquity") or bs.get("totalStockholderEquity")) assets = safe_float(bs.get("totalAssets")) liabilities = safe_float(bs.get("totalLiabilities") or bs.get("totalLiab"))# ✅ Use raw values (already split-adjusted by EODHD) adj_price = safe_float(price_lookup.get((ticker, year))) if price_lookup elseNone adj_shares_out = shares_out eps = net_income / adj_shares_out if net_income and adj_shares_out elseNone market_cap = adj_price * adj_shares_out if adj_price and adj_shares_out elseNonetry: roe = net_income / equity if net_income and equity elseNone roa = net_income / assets if net_income and assets elseNone op_margin = op_income / revenue if op_income and revenue elseNone d_to_e = liabilities / equity if liabilities and equity elseNone pe = adj_price / eps if adj_price and eps elseNone div_yield =abs(dividends / adj_price) if dividends and adj_price elseNoneexcept: roe = roa = op_margin = d_to_e = pe = div_yield =None row = {"ticker": ticker,"year": year,"report_date": datetime(year +1, 3, 15),"sector": data.get("General", {}).get("Sector"),"industry": data.get("General", {}).get("Industry"),"updatedAt": data.get("General", {}).get("UpdatedAt"),# Raw"netIncome": net_income,"ebitda": ebitda,"revenue": revenue,"operatingIncome": op_income,"dividendsPaid": dividends,"totalAssets": assets,"totalLiabilities": liabilities,"totalEquity": equity,"sharesOutstanding": adj_shares_out,"price": adj_price,"grossProfit": gross_profit,"grossMargin": gross_profit / revenue if gross_profit and revenue elseNone,# Computed"eps": eps,"marketCap": market_cap,"peRatio": pe,"roe": roe,"roa": roa,"operatingMargin": op_margin,"dividendYield": div_yield,"debtToEquity": round(d_to_e, 4) if d_to_e isnotNoneelseNone } rows.append(row) df = pd.DataFrame(rows)ifnot df.empty: df["epsGrowthYoY"] = df.groupby("ticker")["eps"].pct_change(fill_method=None)return df ifnot df.empty elseNoneexceptExceptionas e:print(f"[ERROR] {ticker}: {e}")returnNone# ========== FETCH LOOP ==========all_data = []for i, ticker inenumerate(tickers):print(f"[{i+1}/{len(tickers)}] Fetching fundamentals for {ticker}...") df = get_fundamentals_by_year(ticker, price_lookup=price_lookup)if df isnotNone: df.to_parquet(fundamentals_dir /f"{ticker}.parquet", index=False) all_data.append(df) time.sleep(RATE_LIMIT_SECONDS)# ========== SAVE COMBINED ==========if all_data: combined_df = pd.concat(all_data, ignore_index=True) combined_df.to_parquet("data/fundamentals_computed_eps_2015_2025.parquet", index=False)print("✅ Saved to data/fundamentals_computed_eps_2015_2025.parquet")else:print("⚠️ No data fetched.")

2.9 Macroeconomic Data

Using FRED data, we compiled six key U.S. economic indicators to track trends relevant for market analysis and financial modeling:

Data Summary:

All series span 2015–2025, with monthly or daily frequency as reported.

Each series is saved individually and combined into a single dataset for integrated analysis.

These indicators provide a foundation for examining inflation, monetary policy, labor markets, liquidity, and economic cycles over the past decade.

Code

from fredapi import Fredimport pandas as pdfrom pathlib import Path# ========== CONFIG ==========FRED_API_KEY ="668705150df9cf1efd80b639bfb558ef"# <-- Replace with your FRED API keyOUTPUT_DIR = Path("data/macro")OUTPUT_DIR.mkdir(parents=True, exist_ok=True)START_DATE ='2015-01-01'END_DATE ='2025-01-01'# ========== INIT FRED ==========fred = Fred(api_key=FRED_API_KEY)# ========== MACRO SERIES TO PULL ==========macro_series = {"CPIAUCSL": "cpi", # Consumer Price Index (All Urban Consumers)"FEDFUNDS": "fed_funds", # Federal Funds Rate"UNRATE": "unemployment", # Unemployment Rate"INDPRO": "industrial_prod", # Industrial Production Index"M2SL": "m2_supply", # Money Supply M2 (Seasonally Adjusted)"T10Y2Y": "yield_curve", # 10Y - 2Y Treasury Yield Spread (recession signal)}# ========== DOWNLOAD & SAVE ==========all_data = []for code, name in macro_series.items():print(f"⬇️ Downloading {name} ({code})...") series = fred.get_series(code, observation_start=START_DATE, observation_end=END_DATE) df = series.reset_index() df.columns = ['date', name] df.to_parquet(OUTPUT_DIR /f"{name}.parquet", index=False) all_data.append(df)# ========== MERGE ALL INTO SINGLE DATAFRAME (optional) ==========macro_df = all_data[0]for df in all_data[1:]: macro_df = pd.merge(macro_df, df, on='date', how='outer')macro_df = macro_df.sort_values("date").reset_index(drop=True)macro_df.to_parquet(OUTPUT_DIR /"macro_combined.parquet", index=False)print("✅ All macro data saved to data/macro/")

3. Layer 1: Alpha Signal Construction

3.1 Forward Return Analysis (S&P 500, 2015–2025)

A key prerequisite for alpha research is the definition of return targets that models will attempt to predict.

To this end, we computed forward returns across multiple horizons:

where \(h\) is the horizon length in trading days.

Returns were calculated separately for each stock by grouping data by ticker.

This ensures that firm-level price dynamics are respected and prevents information leakage across securities.

All forward returns were merged into a horizon-labeled dataset , allowing downstream models to flexibly train and test across different horizons.

Output

Horizon-specific files (1d, 5d, 21d) stored in layer1_alpha_signals/returns/.

A combined dataset aligning all horizons for cross-sectional modeling.

Example progression: if \(Close_{t} = 100\) and \(Close_{t+5} = 103\), then \(\text{fwd\_ret}_{5d} = 0.03\).

Research Implications

The multi-horizon approach allows us to evaluate which types of signals are most effective at which timescales.

Shorter horizons may capture technical inefficiencies and microstructure effects, while longer horizons allow integration of fundamental and macroeconomic drivers.

These return labels serve as the ground truth for supervised learning models , making their definition and accuracy central to the entire research pipeline.

Alpha Signal Construction from Literature

While forward returns provide the prediction target, the real challenge is designing predictive features (alpha signals).

For this, we implemented a library of 101 standardized alpha factors , inspired by WorldQuant’s Alpha101 framework.

Source Reference

The formulas were obtained from the open-source repository WorldQuant Alpha101 Code, which replicates the proprietary Alpha101 formulas described in WorldQuant’s internal research.

The corresponding PDF serves as a theoretical reference, offering descriptions of each alpha, its financial intuition, and expected behavior in different market conditions.

Signal Philosophy

The Alpha101 framework builds signals by combining price, volume, and ranking operations into compact formulas.

For example:

Some alphas exploit mean reversion (e.g., stocks that overreact on one day tend to revert in the next).

Others capture momentum or continuation effects by ranking returns across peers.

Many signals normalize features cross-sectionally, highlighting relative opportunities rather than absolute values.

Implementation

Each alpha is expressed as a single-line formula combining operations like rank, delta (change), ts_mean (time-series mean), and correlation.

This captures whether volume spikes align with price movements, potentially signaling liquidity-driven mispricings.

By replicating all 101 formulas, we created a benchmark library of signals that can be tested individually and in combination.

Research Implications

Using standardized alphas ensures comparability with prior research and allows us to benchmark against established baselines.

These alphas are purely data-driven transformations of market variables, meaning they are portable across regions and asset classes.

Combining them with novel machine learning features later in the pipeline helps us assess whether modern methods genuinely outperform legacy alpha construction.

Code

import pandas as pdfrom pathlib import Path# ========== LOAD OHLCV ==========ohlcv_df = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")ohlcv_df = ohlcv_df.sort_values(["ticker", "date"])# ========== COMPUTE FORWARD RETURNS ==========horizons = [1, 5, 21]for h in horizons: ohlcv_df[f"fwd_ret_{h}d"] = ( ohlcv_df.groupby("ticker")["close"] .transform(lambda x: x.shift(-h) / x -1))# ========== SAVE TO DISK ==========out_dir = Path("layer1_alpha_signals/returns")out_dir.mkdir(parents=True, exist_ok=True)# Save each horizon separatelyfor h in horizons: ohlcv_df[["ticker", "date", f"fwd_ret_{h}d"]].to_parquet( out_dir /f"forward_returns_{h}d.parquet", index=False )# Save all togethercols = ["ticker", "date"] + [f"fwd_ret_{h}d"for h in horizons]ohlcv_df[cols].to_parquet(out_dir /"forward_returns_all.parquet", index=False)print("✅ Forward returns saved to 'layer1_alpha_signals/returns/'")

Code

import numpy as npimport pandas as pdfrom numpy importabsfrom numpy import logfrom numpy import signfrom scipy.stats import rankdata# region Auxiliary functionsdef ts_sum(df, window=10):""" Wrapper function to estimate rolling sum. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return df.rolling(window).sum()def sma(df, window=10):""" Wrapper function to estimate SMA. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return df.rolling(window).mean()def stddev(df, window=10):""" Wrapper function to estimate rolling standard deviation. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return df.rolling(window).std()def correlation(x, y, window=10):""" Wrapper function to estimate rolling corelations. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return x.rolling(window).corr(y)def covariance(x, y, window=10):""" Wrapper function to estimate rolling covariance. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return x.rolling(window).cov(y)def rolling_rank(na):""" Auxiliary function to be used in pd.rolling_apply :param na: numpy array. :return: The rank of the last value in the array. """return rankdata(na)[-1]def ts_rank(df, window=10):""" Wrapper function to estimate rolling rank. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series rank over the past window days. """return df.rolling(window).apply(rolling_rank)def rolling_prod(na):""" Auxiliary function to be used in pd.rolling_apply :param na: numpy array. :return: The product of the values in the array. """return np.prod(na)def product(df, window=10):""" Wrapper function to estimate rolling product. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series product over the past 'window' days. """return df.rolling(window).apply(rolling_prod)def ts_min(df, window=10):""" Wrapper function to estimate rolling min. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series min over the past 'window' days. """return df.rolling(window).min()def ts_max(df, window=10):""" Wrapper function to estimate rolling min. :param df: a pandas DataFrame. :param window: the rolling window. :return: a pandas DataFrame with the time-series max over the past 'window' days. """return df.rolling(window).max()def delta(df, period=1):""" Wrapper function to estimate difference. :param df: a pandas DataFrame. :param period: the difference grade. :return: a pandas DataFrame with today’s value minus the value 'period' days ago. """return df.diff(period)def delay(df, period=1):""" Wrapper function to estimate lag. :param df: a pandas DataFrame. :param period: the lag grade. :return: a pandas DataFrame with lagged time series """return df.shift(period)def rank(df):""" Cross sectional rank :param df: a pandas DataFrame. :return: a pandas DataFrame with rank along columns. """#return df.rank(axis=1, pct=True)return df.rank(pct=True)def scale(df, k=1):""" Scaling time serie. :param df: a pandas DataFrame. :param k: scaling factor. :return: a pandas DataFrame rescaled df such that sum(abs(df)) = k """return df.mul(k).div(np.abs(df).sum())def ts_argmax(df, window=10):""" Wrapper function to estimate which day ts_max(df, window) occurred on :param df: a pandas DataFrame. :param window: the rolling window. :return: well.. that :) """return df.rolling(window).apply(np.argmax) +1def ts_argmin(df, window=10):""" Wrapper function to estimate which day ts_min(df, window) occurred on :param df: a pandas DataFrame. :param window: the rolling window. :return: well.. that :) """return df.rolling(window).apply(np.argmin) +1def decay_linear(df, period=10):""" Linear weighted moving average implementation. :param df: a pandas DataFrame. :param period: the LWMA period :return: a pandas DataFrame with the LWMA. """if df.isnull().values.any(): df.fillna(method='ffill', inplace=True) df.fillna(method='bfill', inplace=True) df.fillna(value=0, inplace=True) na_lwma = np.zeros_like(df.values) na_lwma[:period, :] = df.iloc[:period, :].values na_series = df.values divisor = period * (period +1) /2 y = (np.arange(period) +1) *1.0/ divisorfor row inrange(period -1, df.shape[0]): x = na_series[row - period +1: row +1, :] na_lwma[row, :] = (np.dot(x.T, y))return pd.DataFrame(na_lwma, index=df.index, columns=df.columns)# endregion

import pandas as pdimport numpy as npfrom pathlib import Path# Alpha generation wrapperdef get_alpha(df): stock = Alphas(df) alpha_df = pd.DataFrame(index=df.index)for i in [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20,21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40,41, 42, 43, 44, 45, 46, 47, 49, 50, 51, 52, 53, 54, 55, 57, 60, 61, 62, 64, 65,66, 68, 71, 72, 73, 74, 75, 77, 78, 81, 83, 84, 85, 86, 88, 92, 94, 95, 96, 98, 99, 101 ]: method_name =f'alpha{i:03d}'try: func =getattr(stock, method_name) alpha_df[method_name] = func()exceptExceptionas e:print(f"❌ {method_name} failed: {e}") alpha_df[method_name] = np.nanreturn alpha_df# Load enriched OHLCV dataohlcv_df = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")ohlcv_df = ohlcv_df.sort_values(by=["ticker", "date"])# Create output directoryalpha_dir = Path("layer1_alpha_signals/alphas")alpha_dir.mkdir(parents=True, exist_ok=True)# Loop through each tickertickers = ohlcv_df["ticker"].unique()for ticker in tickers: df_ticker = ohlcv_df[ohlcv_df["ticker"] == ticker].copy()# Ensure necessary columns exist required_cols = ['open', 'high', 'low', 'close', 'volume', 'adjusted_close']ifnotall(col in df_ticker.columns for col in required_cols):print(f"⚠️ Skipping {ticker} due to missing required OHLCV columns")continue# Drop NaNs from required columns (to avoid early rolling/pct_change NaNs) df_ticker = df_ticker.dropna(subset=required_cols)# Skip if too shortiflen(df_ticker) <60: # or whatever minimum is needed for long rolling alphasprint(f"⚠️ Skipping {ticker} due to insufficient data")continuetry:# Compute alphas df_alpha = get_alpha(df_ticker)# Add back date and ticker for reference df_alpha["date"] = df_ticker["date"].values df_alpha["ticker"] = df_ticker["ticker"].values# Save to file df_alpha.to_parquet(alpha_dir /f"{ticker}.parquet", index=False)print(f"✅ Saved alphas for {ticker}")exceptExceptionas e:print(f"❌ Failed to compute alphas for {ticker}: {e}")

3.3 Consolidation of Decade-Long Alpha Signals for Market Analysis

The process began quietly, as the system scanned the folder for alpha files. Each file held a snapshot of signals for different stocks on different dates, tiny pieces of a much larger puzzle.

One by one, the files were read, and their contents collected into a growing set. Soon, all these fragments were stitched together into a massive matrix where each row represented a stock on a specific day, and each column captured a different alpha signal.

The combined dataset contained thousands of rows and over 80 signal columns , each reflecting subtle or pronounced market movements.

Some entries were missing (NaN), but most cells held numerical values ranging from small fluctuations to larger spikes, both positive and negative.

The data spanned from early 2015 to mid-2025 , providing a decade-long view of market activity for tickers like AAPL.

Each alpha signal offered insight into market trends, with some highlighting abrupt changes while others traced more subtle patterns.

Once the assembly was complete, the full matrix was saved as a single consolidated file. This output preserved the story of all individual alpha signals over time, ready for modeling or further analysis, ensuring that no piece of the decade-long market narrative was lost.

Code

import pandas as pdfrom pathlib import Pathalpha_dir = Path("layer1_alpha_signals/alphas")all_files =list(alpha_dir.glob("*.parquet"))df_list = []for f in all_files: df = pd.read_parquet(f) df_list.append(df)# Concatenate into one giant alpha matrixcombined_alphas = pd.concat(df_list, ignore_index=True)# Save for modeling/labelingcombined_alphas.to_parquet("layer1_alpha_signals/all_alphas_combined.parquet", index=False)print("✅ Combined all alpha files.")

3.4 FINBERT

The journey began with a list of tickers, each representing a company in the S&P 500. For each ticker, the system reached out to the vast sea of Finnhub news, gathering stories from the past year.

The goal was simple yet ambitious: capture the pulse of the market through headlines and summaries, but without overwhelming the system—only the most recent 20 articles per company were retained.

Over 500 tickers, the scraper moved steadily, pausing slightly between requests to stay polite to the API.

Each article recorded included the date of publication, headline, summary, source, and URL, preserving the context and origin.

The data was carefully sorted by ticker and date, ensuring the most recent news appeared first.

The data was carefully sorted by ticker and date, ensuring the most recent news appeared first.

In total, nearly 10,000 headlines were collected, representing a year of market-relevant information for the entire S&P 500.

The resulting dataset was saved as a single file, a ready-to-use repository of market sentiment. It became a chronicle of corporate news, capturing the stories that could sway investor decisions and shape alpha signals in the market.

Code

import requestsimport pandas as pdfrom datetime import datetime, timedeltafrom tqdm import tqdmimport timeimport os# === Your Finnhub API key ===API_KEY ="d1n7fkhr01qovv8hh6a0d1n7fkhr01qovv8hh6ag"# === Load tickers from OHLCV file ===df_ohlcv = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")sp500_tickers = ( df_ohlcv["ticker"] .dropna() .astype(str) .str.strip() .unique() .tolist())# Create the directory if it doesn't existos.makedirs("layer1_alpha_signals/sentiment", exist_ok=True)# === News collection setup ===cutoff_date = datetime.today() - timedelta(days=365)news_data = []# === Loop through tickers ===for ticker in tqdm(sp500_tickers, desc="Fetching Finnhub news"): url = (f"https://finnhub.io/api/v1/company-news"f"?symbol={ticker}"f"&from={cutoff_date.strftime('%Y-%m-%d')}"f"&to={datetime.today().strftime('%Y-%m-%d')}"f"&token={API_KEY}" )try: response = requests.get(url) response.raise_for_status() articles = response.json() count =0for item in articles:if"headline"in item and"datetime"in item: pub_date = datetime.utcfromtimestamp(item["datetime"])if pub_date >= cutoff_date: news_data.append({"date": pub_date,"ticker": ticker,"headline": item["headline"],"summary": item.get("summary", ""),"source": item.get("source", ""),"url": item.get("url", "") }) count +=1if count >=20:break# ✅ Cap at 20 per ticker time.sleep(1) # Throttle to stay under 60 calls/minexceptExceptionas e:print(f"⚠️ Failed for {ticker}: {e}")# === Convert to DataFrame and Save ===df_news = pd.DataFrame(news_data)df_news = df_news.sort_values(by=["ticker", "date"], ascending=[True, False])df_news.to_parquet("layer1_alpha_signals/sentiment/raw_news_finnhub.parquet", index=False)print(f"✅ Collected {len(df_news)} headlines from Finnhub.")

Over the course of several hours, each of the nearly 10,000 collected headlines was carefully examined for its emotional tone. Using FinBERT, the system treated each headline as a small story tied to a company and its ticker, interpreting the market sentiment embedded in the words.

Headlines were filtered to ensure only valid, meaningful text was analyzed.

For every entry, the model assigned three sentiment scores—negative, neutral, and positive—quantifying the tone of each article in a structured way.

The processing was methodical, moving headline by headline, capturing subtle nuances in market language and framing it numerically for analysis.

In the end, the sentiment scores were consolidated into a single dataset, giving a, timestamped map of market mood across all S&P 500 companies.

This resource became a quantitative lens on market psychology, ready for further modeling, strategy development, or historical analysis.

The process unfolded like a exploration of each company’s price history. Every S&P 500 stock was treated as its own timeline, where daily prices revealed patterns and fluctuations.

Log returns captured day-to-day percentage changes, forming the basis for all subsequent measures.

Volatility over a 21-day window reflected the intensity of recent price swings, highlighting periods of calm and turbulence.

RSI quantified momentum, showing when a stock was overbought or oversold over a 14-day horizon.

MACD and its signal line offered insight into trend direction and shifts, exposing subtle market turns.

Bollinger Bands framed the price action, marking statistical bounds of recent movement and helping identify extremes.

Once computed for all tickers, the indicators were consolidated into a comprehensive dataset. The result was a high-resolution map of technical signals across the market, ready to feed models or inform trading strategies. The workflow was precise, consistent, and captured the rhythm of market behavior over time.

Code

import pandas as pdimport numpy as npfrom tqdm import tqdmimport os# Ensure output directory existsos.makedirs("layer1_alpha_signals/technicals", exist_ok=True)# Load OHLCV datadf_ohlcv = pd.read_parquet("data/sp500_ohlcv_enriched.parquet")df_ohlcv = df_ohlcv.sort_values(by=["ticker", "date"]).reset_index(drop=True)# Container for featurestechnical_features = []# Calculate indicators per tickerfor ticker, df in tqdm(df_ohlcv.groupby("ticker"), desc="Computing Technical Indicators"): df = df.copy() df["log_return"] = np.log(df["adjusted_close"] / df["adjusted_close"].shift(1))# 21-day rolling volatility df["volatility_21d"] = df["log_return"].rolling(window=21).std()# RSI (14-day) delta = df["adjusted_close"].diff() gain = (delta.where(delta >0, 0)).rolling(14).mean() loss = (-delta.where(delta <0, 0)).rolling(14).mean() rs = gain / loss df["rsi_14"] =100- (100/ (1+ rs))# MACD (12/26 EMA) + Signal (9 EMA of MACD) ema_12 = df["adjusted_close"].ewm(span=12, adjust=False).mean() ema_26 = df["adjusted_close"].ewm(span=26, adjust=False).mean() df["macd"] = ema_12 - ema_26 df["macd_signal"] = df["macd"].ewm(span=9, adjust=False).mean()# Bollinger Bands (20-day) sma_20 = df["adjusted_close"].rolling(window=20).mean() std_20 = df["adjusted_close"].rolling(window=20).std() df["bb_upper"] = sma_20 +2* std_20 df["bb_middle"] = sma_20 df["bb_lower"] = sma_20 -2* std_20# Keep only required columns technical_features.append( df[["date", "ticker", "volatility_21d", "rsi_14", "macd", "macd_signal", "bb_upper", "bb_middle", "bb_lower"]] )# Concatenate all tickersdf_technicals = pd.concat(technical_features, axis=0).reset_index(drop=True)# Save to parquetdf_technicals.to_parquet("layer1_alpha_signals/technicals/technicals.parquet", index=False)print("✅ Saved technical indicators.")

3.7 Daily Options Market Metrics for S&P 500 Stocks

The analysis distilled option market activity into a set of concise, actionable metrics for each S&P 500 stock on each trading day:

Total volume and open interest captured overall trading intensity.

Call/put ratios in volume and open interest highlighted directional sentiment.

Implied volatility (IV) mean and skew measured market expectations and asymmetry around the median strike.

Max OI strike relative to median pinpointed the price level attracting the most positioning.

These engineered features compress the complexity of the options market into a daily snapshot per ticker, providing a clear, quantifiable view of trader behavior and market expectations.

The resulting dataset is saved and ready to integrate with price, technical, and sentiment data for downstream modeling or strategy analysis.

Fundamental metrics for S&P 500 firms—PE, ROE, ROA, EPS, growth, leverage, margins, dividends—were cross-sectionally ranked and z-scored per report date.

The processed dataset provides standardized, comparable signals across tickers, ready for integration with market, technical, and sentiment data.

Code

import pandas as pdimport numpy as npimport osfrom scipy.stats import zscore# ✅ Ensure output directory existsos.makedirs("layer1_alpha_signals/fundamentals", exist_ok=True)# ✅ Load the fundamentals datasetdf_fund = pd.read_parquet("data/fundamentals_computed_eps_2015_2025.parquet")# ✅ Drop missing tickers or report datesdf_fund = df_fund.dropna(subset=["ticker", "report_date"])# ✅ Select fundamental features to engineerfundamental_cols = ["peRatio", "roe", "roa", "eps", "epsGrowthYoY", "marketCap","debtToEquity", "operatingMargin", "dividendYield"]# ✅ Drop rows with too many missing values (e.g., less than 3 valid metrics)df_fund = df_fund[df_fund[fundamental_cols].notnull().sum(axis=1) >=3]# ✅ Normalize cross-sectionally per report dateresults = []for report_date, group in df_fund.groupby("report_date"): df = group.copy()for col in fundamental_cols:if col in df.columns: df[f"{col}_rank"] = df[col].rank(pct=True) df[f"{col}_z"] = zscore(df[col].fillna(df[col].mean())) results.append(df)# ✅ Combine and sortdf_final = pd.concat(results).sort_values(["ticker", "report_date"])# ✅ Save the final outputdf_final.to_parquet("layer1_alpha_signals/fundamentals/fundamental_signals.parquet", index=False)print("✅ Saved fundamental alpha signals.")

Code

df_final[df_final['ticker']=='AAPL']

3.9 Separate into Features and Target

The datasets are now fully aligned and clean: all date and report_date fields are proper datetime types with no missing values, ensuring reliable merging or time-based analysis.

X (market/technical data) is daily-sorted per ticker, while fundamentals is sorted by report date, reflecting quarterly/annual updates.

This confirms a clear temporal structure: daily prices exist between periodic fundamental updates, allowing forward-looking integration of fundamental signals into market data without gaps or misalignment.

Code

# Ensure date typesX["date"] = pd.to_datetime(X["date"])fundamentals["report_date"] = pd.to_datetime(fundamentals["report_date"])# Check for nullsprint("Nulls in date:", X["date"].isnull().sum())print("Nulls in report_date:", fundamentals["report_date"].isnull().sum())# Double-check dtypesprint("X['date'] dtype:", X["date"].dtype)print("fundamentals['report_date'] dtype:", fundamentals["report_date"].dtype)# Sort again just to be 100% sureX = X.sort_values(["ticker", "date"]).reset_index(drop=True)fundamentals = fundamentals.sort_values(["ticker", "report_date"]).reset_index(drop=True)# Check orderprint("\nFirst few rows of X:")print(X[["ticker", "date"]].head())print("\nFirst few rows of fundamentals:")print(fundamentals[["ticker", "report_date"]].head())

3.10 Comprehensive Multi-Layered Dataset Integration for S&P 500 Stocks

We integrated all layers of signals into a single coherent dataset. Daily returns serve as the backbone (X), onto which we merged fast-moving signals—alphas, news sentiment, technicals, and options—preserving exact (date, ticker) alignment.

Fundamentals, being slower-moving, were merged by ticker and filtered to only include reports available on or before each trading day, ensuring a realistic forward-looking setup.

Finally, for days with multiple fundamental reports, we kept only the latest available per (ticker, date).

The result is a fully merged dataset ready for modeling, combining daily market dynamics with option, sentiment, technical, and fundamental insights.

3.11 Alpha Signal Merging and Forward Returns Alignment

This part outlines the process of preparing the Layer 1 dataset by merging various alpha signals and aligning them with forward returns for downstream modeling.

Code

# ───────────────────────────────# 1. Load and align raw forward returns# ───────────────────────────────# Keep only the forward returnsY = returns[["date", "ticker", "fwd_ret_1d", "fwd_ret_5d", "fwd_ret_21d"]]# Merge with `merged` to align with your feature dataY = merged[["date", "ticker"]].merge(Y, on=["date", "ticker"], how="left")# Sort and cleanY["date"] = pd.to_datetime(Y["date"])Y = Y.sort_values(["date", "ticker"]).reset_index(drop=True)# ───────────────────────────────# 2. Save full Y# ───────────────────────────────Y.to_parquet("layer1_alpha_signals/merged/Y_raw_returns.parquet", index=False)Y.to_csv("layer1_alpha_signals/merged/Y_raw_returns.csv", index=False)# ───────────────────────────────# 3. Create train/test split# ───────────────────────────────Y_train = Y[Y["date"].dt.year <=2024].reset_index(drop=True)Y_test = Y[Y["date"].dt.year ==2025].reset_index(drop=True)# Optional: Save these splits tooY_train.to_parquet("layer1_alpha_signals/merged/Y_train.parquet", index=False)Y_test.to_parquet("layer1_alpha_signals/merged/Y_test.parquet", index=False)

3.12 Feature Engineering

First, we isolated 2025 as a “holdout” period, saving both features (X_2025) and targets (Y_2025) untouched to preserve a realistic forward-looking test set. This ensures any future modeling can be validated on truly unseen data.

Next, for general modeling, we cleaned the feature set by dropping options- and sentiment-related columns—features that are either specialized, high-frequency, or text-based—leaving a stable cross-sectional set of alphas, fundamentals, and technicals.

We then assessed missingness across features. Most features are complete, but some fundamental metrics like dividendYield and epsGrowthYoY have ~10–13% missing values, while a few alphas and market features show minor gaps (<5%). This provides a clear roadmap for imputation or feature selection, balancing data completeness with predictive richness.

In short, the dataset is now ready for modeling, with a clean general-purpose feature set and a separate untouched 2025 set for robust evaluation.

Code

# 🔐 STEP 1 — Save the untouched 2025 subset FIRST (preserving all columns)X_2025 = merged[merged["date"].dt.year ==2025]Y_2025 = Y[Y["date"].dt.year ==2025]X_2025.to_parquet("layer1_alpha_signals/merged/X_2025_signals.parquet", index=False)Y_2025.to_parquet("layer1_alpha_signals/merged/Y_2025_returns.parquet", index=False)# 🧼 STEP 2 — Now drop options + sentiment features for general modelingdrop_cols = [ col for col in merged.columnsif ( col.startswith("iv_") or col.startswith("option_") or col.startswith("call_put_ratio") or col.startswith("max_oi") or col.startswith("finbert_") or col in ["headline", "company"] )]X_model_clean = merged.drop(columns=drop_cols)

3.13 3-Level Median Imputation

We began by sorting the dataset by ticker and report date, ensuring that every step respects the natural flow of time. This is critical because any leakage of future information would distort our analysis.

Next, we addressed missing values in key fundamental features such as dividendYield, epsGrowthYoY, and dividendsPaid. Instead of a simple fill, we adopted a hierarchical, time-aware strategy: we first looked at the company’s own history, filling gaps with the expanding median of past values.

Where data was still missing, we leveraged broader groups — first the industry, then the sector, and finally, if necessary, the global median.

This approach ensures that imputations are grounded in relevant historical and peer information, rather than arbitrary guesses.

For alpha signals, which are meant to capture deviations from typical behavior, we used a conservative default of 0 wherever missing. This prevents introducing artificial signals that could bias our models.

Valuation metrics (price, peRatio, marketCap) were treated with a forward-fill approach, backed up by an expanding median to ensure no future information is used. This allows us to retain as much data as possible while maintaining a strict temporal structure.

After completing this imputation process, the dataset became much cleaner. Most missing values were in rank-normalized fundamentals (dividendYield_rank ~13%, epsGrowthYoY_rank ~10%), but the majority of technical, alpha, and categorical features were now complete.

In essence, we transformed a partially incomplete dataset into a robust, temporally consistent, and leakage-free foundation ready for modeling, preserving both historical fidelity and cross-sectional structure.

Code

# Step 1: Sort to ensure time orderX_model_clean = X_model_clean.sort_values(["ticker", "report_date"]).copy()# Step 2: Define safe ticker-level expanding mediandef time_safe_group_median(df, group_col, target_col):return ( df.groupby(group_col, group_keys=False) .apply(lambda g: g[target_col].expanding().median().shift()) )# Step 3: Update group_order to start with tickergroup_order = [ ("ticker", lambda df, col: df[col].fillna(time_safe_group_median(df, "ticker", col))), ("industry", lambda df, col: df[col].fillna(time_safe_group_median(df, "industry", col))), ("sector", lambda df, col: df[col].fillna(time_safe_group_median(df, "sector", col))), ("global", lambda df, col: df[col].fillna(df.sort_values("report_date")[col].expanding().median().shift()))]# Step 4: Apply the hierarchical imputationfor col in ["dividendYield", "epsGrowthYoY", "dividendsPaid"]:if col notin X_model_clean.columns:continuefor _, imputer in group_order: X_model_clean[col] = imputer(X_model_clean, col)

Code

alpha_cols = [col for col in X_model_clean.columns if col.startswith("alpha")]for col in alpha_cols:if X_model_clean[col].isnull().sum() >0:# Conservative default: fill with 0 (alpha-neutral) X_model_clean[col] = X_model_clean[col].fillna(0)

Code

def leakage_free_ffill_then_median(df, col, time_col):# Sort by ticker and appropriate time column df = df.sort_values(["ticker", time_col]).copy()# Ticker-wise forward fill ffilled = df.groupby("ticker")[col].transform(lambda x: x.ffill())# Expanding median fallback (only past values) fallback = df[col].expanding().median().shift()return ffilled.fillna(fallback)# Define the valuation features and which date column to use for eachvaluation_cols = ["price", "peRatio", "marketCap"]date_mapping = {"price": "date","peRatio": "report_date","marketCap": "date"}# Apply the imputationfor col in valuation_cols:if col in X_model_clean.columns: time_col = date_mapping[col] X_model_clean[col] = leakage_free_ffill_then_median(X_model_clean, col, time_col)

3.14 Missing Data Analysis After Hierarchical Imputation and Forward-Filling

After completing hierarchical, time-aware imputation and forward-filling, we examined the remaining missingness across features to understand the dataset’s final quality.

The most affected features remain the rank-normalized fundamentals: dividendYield_rank shows the highest missing proportion with 144,912 missing entries, followed by epsGrowthYoY_rank (120,981 missing) and its standardized variant epsGrowthYoY_z (119,849 missing).

These gaps are expected, reflecting the inherent sparsity in dividend and earnings growth reporting across companies and time.

In contrast, market-based rank features such as marketCap_rank and peRatio_rank are nearly complete, with only 4,685 missing values each, indicating that valuation data is largely available.

Technical indicators also demonstrate high completeness: volatility_21d has 546 missing, Bollinger Bands (bb_lower, bb_middle, bb_upper) have 494 each, and rsi_14 shows only 488 missing.

3.15 Feature Distribution and Skew Analysis After Imputation and Standardization

We performed forward-fill and time-aware median imputation for technical indicators (volatility_21d, rsi_14, Bollinger Bands), ensuring that only past information contributes to each row.

Fundamental and market features were standardized (_z) and rank-normalized (_rank) either by report_date or date to preserve cross-sectional comparability.

Examining the distributional skew across numeric features reveals extreme positive skew in certain alpha signals (alpha009: 101.5, alpha052: 61.9) and financial ratios (peRatio: 54.4, roe: 21.1), reflecting the heavy-tailed nature of stock-level factors and earnings metrics. Technical indicators and market measures like bb_lower, bb_middle, bb_upper, and price also exhibit substantial right-skew (10–15 range), consistent with volatility clustering and outlier price movements.

Conversely, a small set of features shows mild negative skew (alpha038: -0.93, grossMargin: -1.33, roe_z: -1.58), indicating left-leaning distributions, often reflecting capped or bounded ratios. Several alpha features remain NaN due to lack of data or inapplicability across certain stocks.

Overall, these results highlight heterogeneous distributions, emphasizing the need for robust scaling and normalization in downstream modeling, particularly for highly skewed alpha signals and earnings-based fundamentals.

Code

def ffill_then_time_median(df, col): df = df.sort_values(["ticker", "date"]).copy()# Safe forward fill per ticker ffilled = df.groupby("ticker")[col].transform(lambda x: x.ffill())# Fallback: expanding global median up to that point (time-safe) fallback = df[col].expanding().median().shift()return ffilled.fillna(fallback)# Apply to technical indicator columnsfor col in ['volatility_21d', 'rsi_14', 'bb_upper', 'bb_middle', 'bb_lower']:if col in X_model_clean.columns: X_model_clean[col] = ffill_then_time_median(X_model_clean, col)

Code

report_date_cols = ["dividendYield", "epsGrowthYoY", "peRatio"]date_cols = ["marketCap"]# Group by report_date for fundamentalsfor col in report_date_cols:if col in X_model_clean.columns: X_model_clean[f"{col}_z"] = ( X_model_clean.groupby("report_date")[col] .transform(lambda x: (x - x.mean()) / x.std(ddof=0)) ) X_model_clean[f"{col}_rank"] = ( X_model_clean.groupby("report_date")[col] .transform(lambda x: x.rank(pct=True)) )# Group by date for market-based featuresfor col in date_cols:if col in X_model_clean.columns: X_model_clean[f"{col}_z"] = ( X_model_clean.groupby("date")[col] .transform(lambda x: (x - x.mean()) / x.std(ddof=0)) ) X_model_clean[f"{col}_rank"] = ( X_model_clean.groupby("date")[col] .transform(lambda x: x.rank(pct=True)) )

3.16 Data Transformation and Dimensionality Reduction

The cleaned dataset was divided into training (up to 2024) and testing (2025) subsets, yielding 1,085,402 and 53,500 observations, respectively. To prepare the features for predictive modeling, we applied separate pipelines tailored for linear and tree-based models.

Linear Pipeline: Log-Scaling and PCA

For features exhibiting extreme skew, we applied log-transformation (log1p) to reduce the influence of outliers. Alpha signals were clipped to a conservative range (±1e6) to remove unrealistic spikes while preserving relative rankings.

Subsequently, the alpha feature set underwent standard scaling and Principal Component Analysis (PCA), producing 10 orthogonal components that capture the dominant modes of cross-sectional variation in the signals.

After this procedure, the linear training and testing datasets each contained 150 features, which include both the original scaled inputs and the PCA-derived components.

This dimensionality reduction mitigates collinearity among alpha factors while retaining most of the systematic variation for modeling.

Tree Pipeline: Adaptive Clipping

For tree-based models, which are robust to monotonic transformations, we implemented an adaptive clipping strategy. Ultra-skewed features (skew > 100) were capped at the 0.5th and 99.5th percentiles, while moderately skewed features (1 < skew ≤ 100) were capped at the 0.1st and 99.9th percentiles.

This approach retains the non-linear structure of the predictors while eliminating extreme outliers that could destabilize tree splits. The resulting tree-ready datasets consist of 140 features, reflecting the preserved numeric variables after clipping and metadata removal.

Feature and Dataset Summary

Training set sizes:

Linear: 1,085,402 × 150 features

Tree: 1,085,402 × 140 features

Testing set sizes:

Linear: 53,500 × 150 features

Tree: 53,500 × 140 features

These preprocessing steps ensure that both linear and tree-based models receive well-conditioned, scale-consistent inputs, with PCA compressing redundant alpha signals for linear models and adaptive clipping controlling extreme values for tree-based models.

The approach balances the need to reduce noise and outlier impact while retaining informative cross-sectional patterns essential for predictive performance.

Code

import numpy as npimport pandas as pdfrom sklearn.decomposition import PCAfrom sklearn.preprocessing import StandardScaler# ───────────────────────────────# 0. Base setup# ───────────────────────────────X_base = X_model_clean.copy()# One-hot encode 'sector'X_base = pd.get_dummies(X_base, columns=["sector"], drop_first=True)# Frequency encode 'industry'industry_freq = X_base["industry"].value_counts(normalize=True)X_base["industry_freq"] = X_base["industry"].map(industry_freq)X_base.drop(columns=["industry"], inplace=True)# Identify numeric columns (excluding metadata)ignore_cols = ["ticker", "date", "year", "report_date", "updatedAt"]numeric_cols = X_base.select_dtypes(include=[np.number]).columns.difference(ignore_cols)# Detect skewed columnsskew_threshold =1.0skewness = X_base[numeric_cols].skew()highly_skewed = skewness[abs(skewness) > skew_threshold].index.tolist()# Identify alpha featuresalpha_cols = [col for col in X_base.columns if col.startswith("alpha") and col in numeric_cols]# ───────────────────────────────# 1. Split into train/test first# ───────────────────────────────X_base_train = X_base[X_base["date"].dt.year <=2024].copy()X_base_test = X_base[X_base["date"].dt.year ==2025].copy()# ───────────────────────────────# 2. Linear version: log1p + PCA# ───────────────────────────────def process_linear(df): df = df.copy()for col in highly_skewed:if col in df.columns: df[col] = np.log1p(df[col].clip(lower=0)) df[alpha_cols] = df[alpha_cols].clip(-1e6, 1e6).replace([np.inf, -np.inf], np.nan).fillna(0) scaler = StandardScaler() scaled_alpha = scaler.fit_transform(df[alpha_cols]) pca = PCA(n_components=10) pca_components = pca.fit_transform(scaled_alpha)for i inrange(10): df[f"alpha_pca_{i+1}"] = pca_components[:, i]return dfX_linear_train = process_linear(X_base_train)X_linear_test = process_linear(X_base_test)# ───────────────────────────────# 3. Tree version: adaptive clipping# ───────────────────────────────def process_tree(df): df = df.copy() skew = df[highly_skewed].skew() ultra_skewed = [col for col in skew.index if skew[col] >100and df[col].nunique() >10] moderately_skewed = [col for col in skew.index if1< skew[col] <=100and df[col].nunique() >10]for col in ultra_skewed: lower, upper = df[col].quantile([0.005, 0.995]) df[col] = df[col].clip(lower, upper)for col in moderately_skewed: lower, upper = df[col].quantile([0.001, 0.999]) df[col] = df[col].clip(lower, upper)return dfX_tree_train = process_tree(X_base_train)X_tree_test = process_tree(X_base_test)# ───────────────────────────────# 4. Drop unused metadata columns# ───────────────────────────────drop_cols = ["year", "report_date", "updatedAt", "date"]X_linear_train.drop(columns=[col for col in drop_cols if col in X_linear_train], inplace=True)X_linear_test.drop(columns=[col for col in drop_cols if col in X_linear_test], inplace=True)X_tree_train.drop(columns=[col for col in drop_cols if col in X_tree_train], inplace=True)X_tree_test.drop(columns=[col for col in drop_cols if col in X_tree_test], inplace=True)print("✅ Final shapes:")print(f"X_linear_train: {X_linear_train.shape}, X_linear_test: {X_linear_test.shape}")print(f"X_tree_train: {X_tree_train.shape}, X_tree_test: {X_tree_test.shape}")

3.17 Data Cleaning and Finalization

After feature engineering, all potential leakage columns (return_1d, return_5d, return_21d, ticker, date, year, report_date, updatedAt) were checked. Only ticker remained in the datasets and was dropped across all train and test splits.

Next, all datasets were screened for infinite values. While the linear datasets contained none, the tree datasets had +inf/-inf in a few alpha features (alpha040, alpha083, alpha084). These values were replaced with 0, ensuring numeric stability. Post-replacement checks confirmed no remaining infinities.

Finally, the cleaned datasets were saved to .feather files for efficient storage and reloaded for modeling.

Outcome:

Linear datasets: X_linear_train (1,085,402 × 149), X_linear_test (53,500 × 149)

Tree datasets: X_tree_train (1,085,402 × 140), X_tree_test (53,500 × 140)

All leakage and infinite values handled, ready for model training.

Code

# Step 1: List suspicious columns to dropleakage_cols = ["return_1d", "return_5d", "return_21d","ticker", "date", "year", "report_date", "updatedAt"]# Step 2: Check which of these are still presentleakage_in_X_linear_train = [col for col in leakage_cols if col in X_linear_train.columns]leakage_in_X_tree_train = [col for col in leakage_cols if col in X_tree_train.columns]leakage_in_X_linear_test = [col for col in leakage_cols if col in X_linear_test.columns]leakage_in_X_tree_test = [col for col in leakage_cols if col in X_tree_test.columns]# Step 3: Print resultsprint("🚨 Leakage columns in X_linear_train:", leakage_in_X_linear_train)print("🚨 Leakage columns in X_tree_train:", leakage_in_X_tree_train)print("🚨 Leakage columns in X_linear_test:", leakage_in_X_linear_test)print("🚨 Leakage columns in X_tree_test:", leakage_in_X_tree_test)

Code

# 🔻 Drop 'ticker' column if present in any of the datasetsfor df_name, df in {"X_linear_train": X_linear_train,"X_linear_test": X_linear_test,"X_tree_train": X_tree_train,"X_tree_test": X_tree_test}.items():if"ticker"in df.columns: df.drop(columns=["ticker"], inplace=True)print(f"🧹 Dropped 'ticker' from {df_name}")

Code

import numpy as np# 🔍 Check for inf/-inf values in all X datasetsfor name, df in {"X_linear_train": X_linear_train,"X_linear_test": X_linear_test,"X_tree_train": X_tree_train,"X_tree_test": X_tree_test}.items(): inf_cols = [col for col in df.columns if df[col].isin([np.inf, -np.inf]).any()]if inf_cols:print(f"🚨 Columns with inf/-inf in {name}: {inf_cols}")else:print(f"✅ No inf/-inf values found in {name}")

Code

import numpy as np# Replace +inf and -inf with 0 in both train and test setsX_tree_train = X_tree_train.replace([np.inf, -np.inf], 0)X_tree_test = X_tree_test.replace([np.inf, -np.inf], 0)print("✅ Replaced +inf and -inf values in X_tree_train and X_tree_test with 0")

Code

# ───────────────────────────────# Save final train/test splits# ───────────────────────────────X_linear_train.reset_index(drop=True).to_feather("X_linear_train.feather")X_linear_test.reset_index(drop=True).to_feather("X_linear_test.feather")X_tree_train.reset_index(drop=True).to_feather("X_tree_train.feather")X_tree_test.reset_index(drop=True).to_feather("X_tree_test.feather")print("💾 Saved: X_linear_train.feather, X_linear_test.feather, X_tree_train.feather, X_tree_test.feather")

4. Layer 2: Alpha Modeling

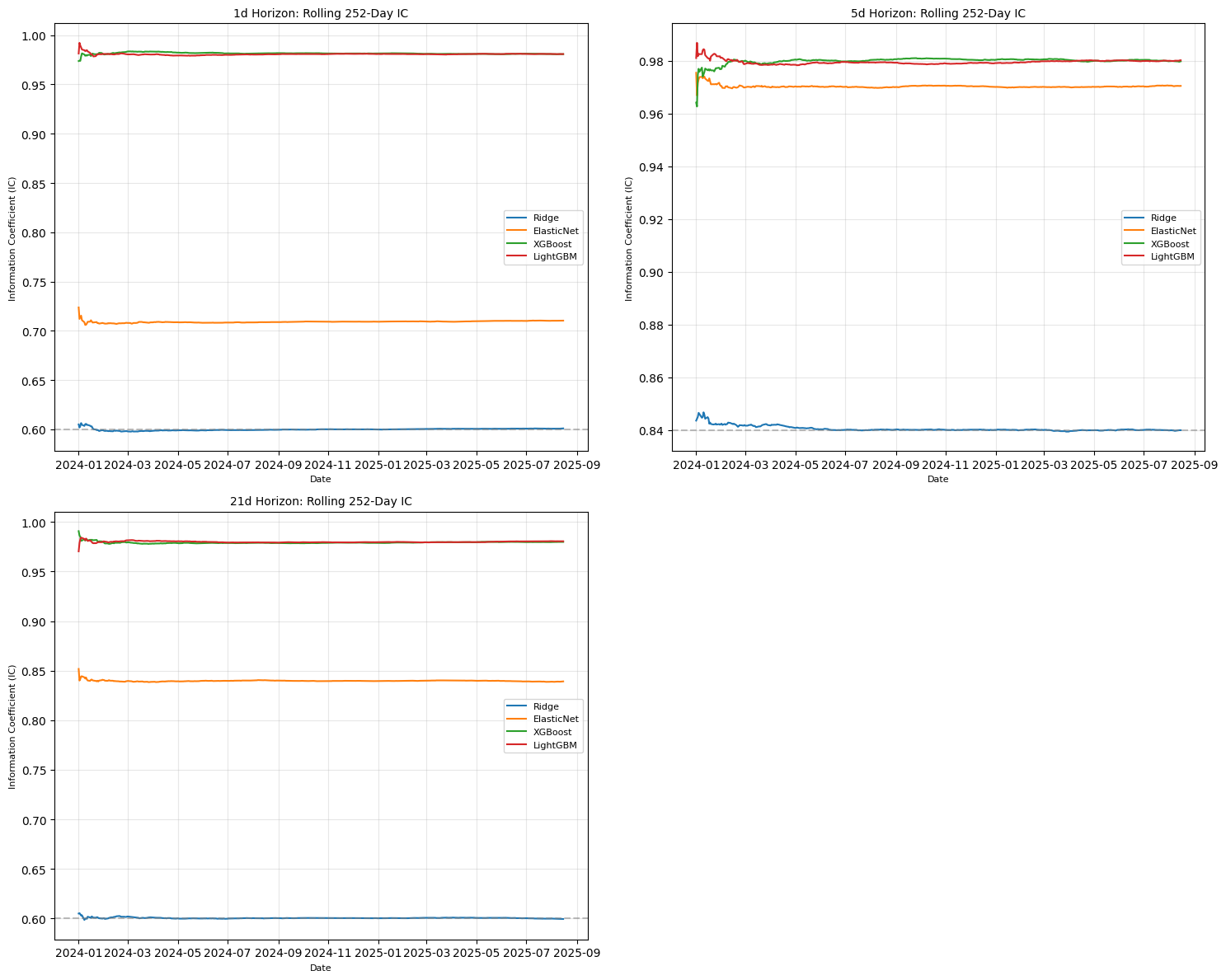

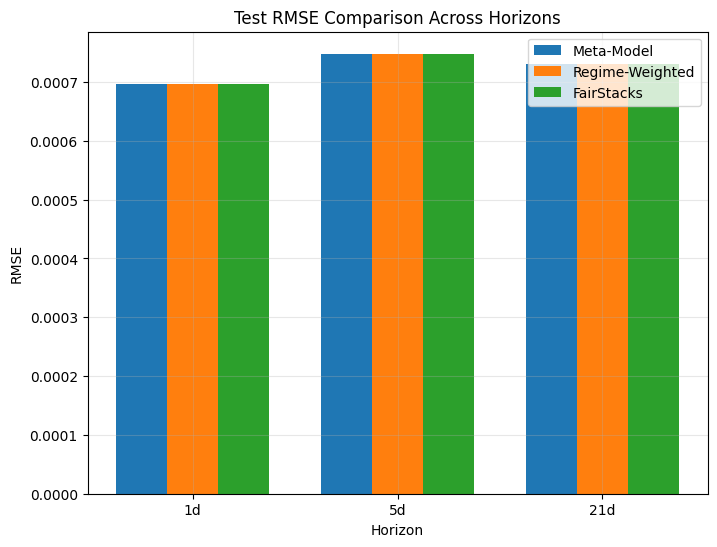

4.1 Feature Pruning – Finding the Signals That Matter

We began with everything—hundreds of potential features for each prediction horizon (1-day, 5-day, 21-day)—but only a fraction would truly help forecast returns.

The goal was clear: start wide, then keep only what’s worth listening to.

Information Coefficient (IC) Filtering

Measured how each feature aligned with future returns using Spearman’s rank correlation calculated daily.

Identified features whose ups and downs moved in step with the market.

Model-Based Feature Importance

Ridge and ElasticNet regressions (with date-grouped 5-fold CV) ranked features by coefficient strength.

XGBoost provided SHAP values, revealing how much each feature influenced predictions.

Feature Selection Strategy

For each method, features were ranked and retained until they covered 95% of total importance.

Linear models: combined IC, Ridge, and ElasticNet winners.

Tree models: combined IC and SHAP results.

The streamlined datasets—saved as Parquet files like X_linear_1d.parquet—were leaner, faster to train, and laser-focused on the true drivers of returns.

4.2 Predicting 5-Day Forward Returns Using IC- and SHAP-Guided Ridge Regression

We aimed to predict 5-day forward returns using a combination of statistical and model-driven feature selection followed by Ridge regression with cross-validation.

The target variable, fwd_ret_5d, was first filtered to remove missing values, ensuring that both training and test datasets contained only valid observations.

Dates were retained to facilitate grouped cross-validation, preventing temporal leakage and preserving the natural order of the data. Initial feature selection was based on the absolute Spearman correlation (IC) between each feature and the target.

Features contributing cumulatively to 95% of the total IC were retained, capturing those with the strongest linear relationship to future returns. To complement this linear perspective, we applied a SHAP-based analysis.

RidgeCV models were trained on the IC-selected features, and linear SHAP values were computed on a representative sample to quantify the marginal contribution of each feature.

Features representing the top 95% of cumulative mean absolute SHAP values were added to the final feature set, creating a union of IC- and SHAP-selected features. This resulted in 78 features, balancing both linear correlation and model-derived importance.

For model training, we employed RidgeCV with a logarithmically spaced alpha range and applied GroupKFold cross-validation using dates as groups.

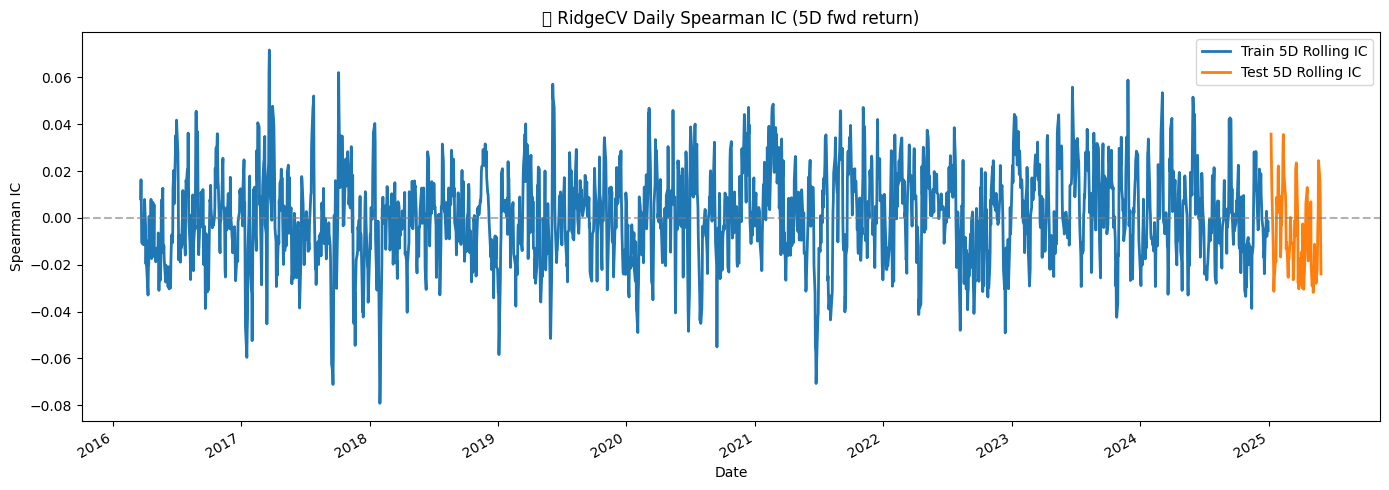

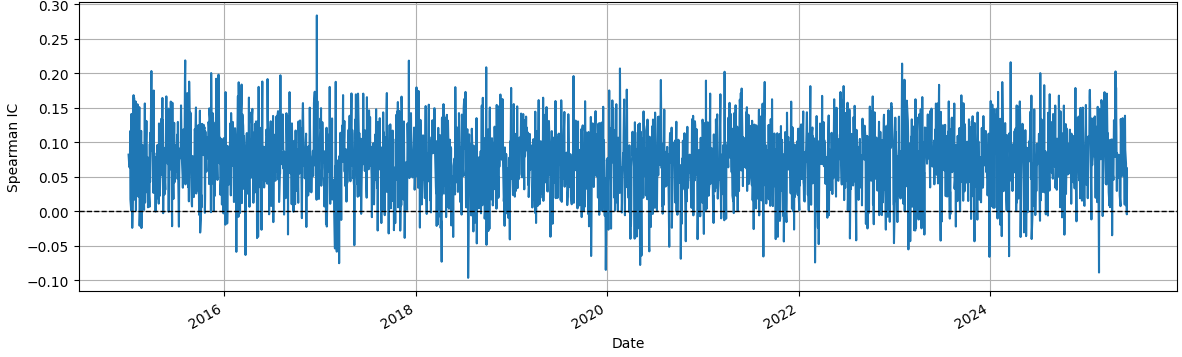

Standard scaling was performed per fold to normalize feature magnitudes. Out-of-fold predictions showed an RMSE of 0.0496, a slightly negative R² of -0.0011, and a small positive Spearman IC of 0.0421, reflecting the low but detectable predictive signal inherent in short-term return data.

The model consistently selected a high regularization parameter (average alpha 100.0), suggesting that the underlying signal is weak relative to noise, which RidgeCV mitigates by shrinking coefficients.

Finally, the model trained on the full training set and evaluated on the test set produced an RMSE of 0.0525, R² of -0.0058, and IC of 0.0024, confirming the modest predictive power observed in cross-validation.

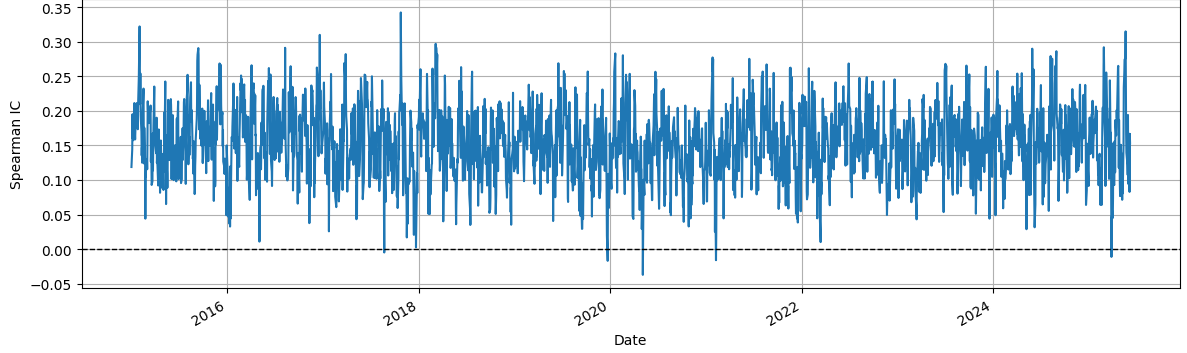

Daily IC was also computed and visualized, showing small, fluctuating correlations over time, consistent with the inherently noisy nature of financial returns. All intermediate predictions, metrics, and rolling daily IC plots were saved for reproducibility and further analysis.

Overall, the study demonstrates that combining IC-based and SHAP-based feature selection allows Ridge regression to capture subtle predictive signals in highly noisy financial data, although the overall explanatory power remains limited due to the stochastic nature of short-term returns.

Predicting 5-Day Forward Returns Using IC- and SHAP-Guided Ridge Regression

4.3 Predicting 1-Day Forward Returns Using IC- and SHAP-Guided ElasticNet Regression

We set out to predict 1-day forward returns using a combination of correlation-based and model-based feature selection, followed by ElasticNetCV regression.

The target variable, fwd_ret_1d, was first filtered to remove missing values, ensuring that both the features and target were aligned.

Corresponding date metadata was extracted to support grouped cross-validation, preserving the temporal structure of the data.

Initial feature selection relied on absolute Spearman correlation (IC) between each feature and the target.

Features contributing cumulatively to 95% of the total IC were retained, capturing those most linearly related to future returns.

To complement this, we trained an ElasticNetCV model on the IC-selected features and computed linear SHAP values on a representative sample of 5,000 observations.

Features contributing to the top 95% of mean absolute SHAP values were identified. The final feature set was the union of IC- and SHAP-selected features, resulting in 102 features that combined linear correlation with model-driven importance.

We then performed cross-validated out-of-fold (OOF) predictions using a GroupKFold strategy with dates as groups. Standard scaling was applied per fold to normalize feature magnitudes.

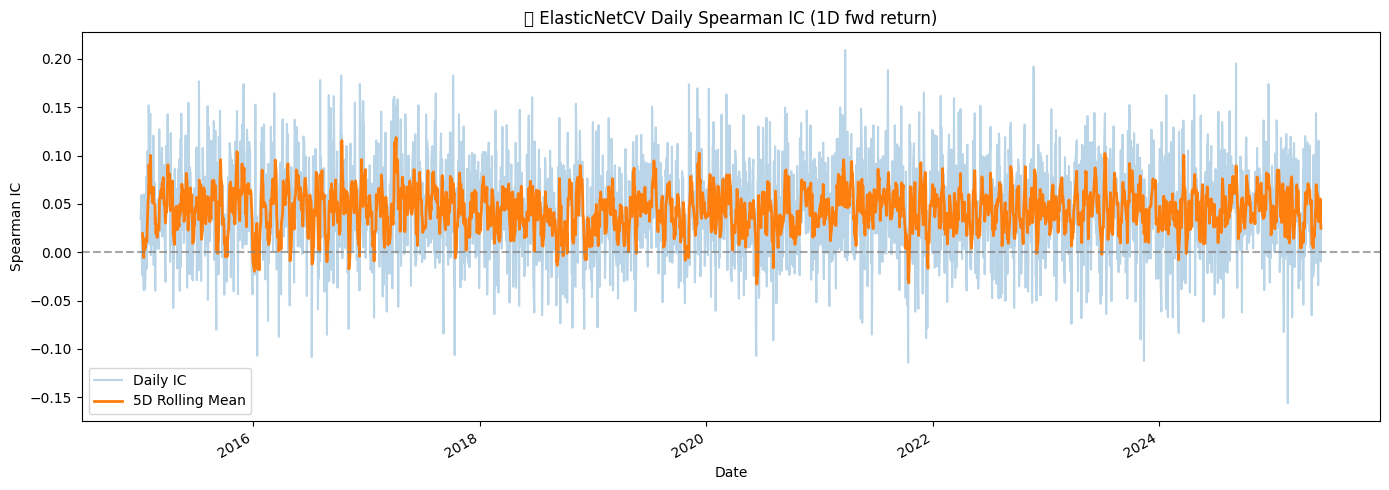

Each fold was trained with ElasticNetCV across multiple l1_ratio values. The resulting fold RMSEs were 0.0215 and 0.0246, with the model consistently selecting a very small regularization parameter (average alpha ≈ 0.000001), reflecting the low signal-to-noise ratio typical of short-term returns.

The final OOF predictions yielded an RMSE of 0.0226, R² of 0.0053, and Spearman IC of 0.0442, indicating modest predictive power.

Daily IC was also computed to examine temporal stability, showing small but persistent correlations between predicted and realized returns.

All intermediate outputs, including OOF predictions, metrics, and daily IC, were saved for reproducibility.

Overall, the study demonstrates that combining IC- and SHAP-based feature selection with ElasticNetCV allows the extraction of subtle signals in highly noisy financial data, although the short-term predictability remains limited.

Predicting 1-Day Forward Returns Using IC- and SHAP-Guided ElasticNet Regression

4.4 Predicting 21-Day Forward Returns Using IC- and SHAP-Guided ElasticNet Regression

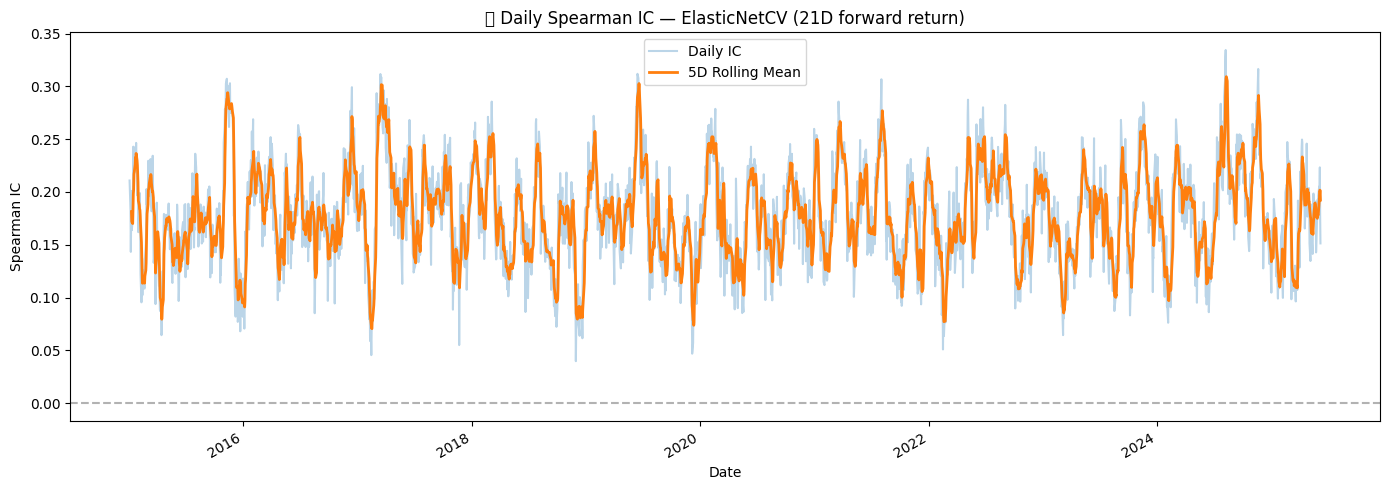

To predict 21-day forward returns, we began by filtering the dataset to include only valid observations for the target variable fwd_ret_21d, while also aligning feature values and corresponding dates. This alignment ensured that grouped cross-validation could later respect the temporal structure of the data.

We first applied correlation-based feature selection, computing the absolute Spearman correlation (IC) between each feature and the target. Features contributing cumulatively to 95% of the total IC were retained. This approach prioritizes variables with the strongest linear association with future returns. Next, we trained an ElasticNetCV model on these IC-selected features and computed linear SHAP values to capture each feature’s contribution to the model predictions. Features responsible for the top 95% of mean absolute SHAP values were selected. The final feature set combined the IC- and SHAP-selected features, yielding 103 features.

Using this feature set, we performed 3-fold GroupKFold cross-validation with dates as the grouping variable, ensuring temporal separation between training and validation sets. Each fold was trained with ElasticNetCV across multiple l1_ratio values. The model’s regularization parameters remained small (average alpha ≈ 0.000914), reflecting modest but measurable predictive signal over 21 days. The out-of-fold predictions achieved an RMSE of 0.0977, an R² of 0.0465, and a Spearman IC of 0.1783, indicating improved predictability compared to the 1-day forward return model.

Finally, we computed daily IC to assess the temporal stability of the predictions. Visual inspection with a rolling 5-day mean revealed periods of slightly higher correlation, suggesting that the model captures some persistent predictive structure in the 21-day horizon. All outputs, including OOF predictions, metrics, daily IC values, and plots, were saved for reproducibility and further analysis.

Predicting 21-Day Forward Returns Using IC- and SHAP-Guided ElasticNet Regression

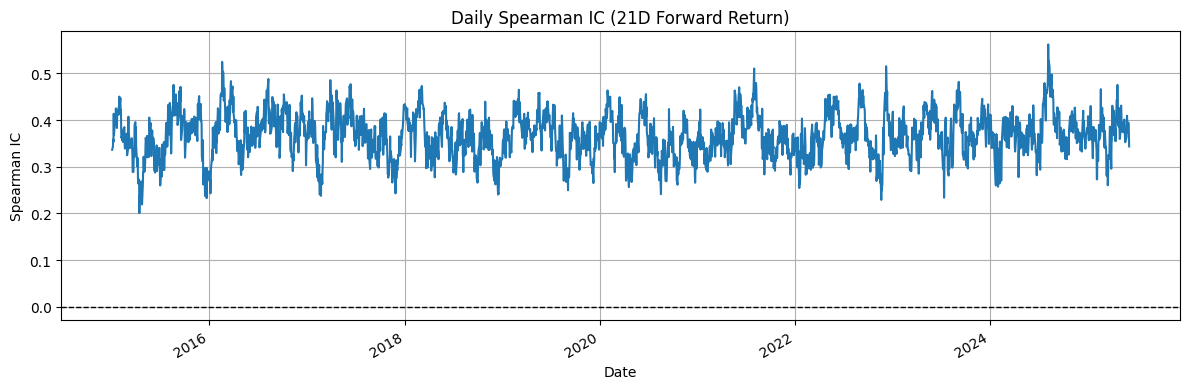

4.5 XGBoost Modeling of 21-Day Forward Returns with IC- and SHAP-Based Feature Selection

We investigated the predictability of 21-day forward returns using a combination of correlation-based and model-driven feature selection, followed by XGBoost Modeling.

The target variable, fwd_ret_21d, was first filtered to exclude missing values, and feature matrices were aligned accordingly. Metadata containing dates was used to ensure temporal integrity, allowing cross-validation to respect the grouping structure of observations by date.

Initial feature selection relied on the absolute Spearman correlation (IC) between each feature and the target. Features accounting for 95% of the cumulative absolute IC were retained, capturing the most directly correlated signals.

To complement this correlation-based approach, we trained an XGBoost model on the IC-selected features and computed tree-based SHAP values on a representative subset of the data. Features contributing to the top 95% of mean absolute SHAP values were extracted.

The final feature set was defined as the union of IC- and SHAP-selected features, resulting in 103 predictors that combined correlation-based relevance with model-inferred importance.

We then conducted out-of-fold predictions using a three-fold GroupKFold cross-validation, ensuring that samples from the same date were not split between training and validation.

Standard scaling was applied to all features to normalize their ranges. Within each fold, XGBoost was optimized over multiple l1_ratio values, yielding small but nonzero regularization parameters (average alpha ≈ 0.000914). Fold-level RMSE ranged from 0.0984 to 0.0990, reflecting the inherent noise in 21-day forward returns.

The combined out-of-fold predictions across all folds achieved an overall RMSE of 0.0977, R² of 0.0465, and a Spearman IC of 0.1783, indicating modest predictive ability.

Daily Spearman IC was also examined, revealing small but consistent correlation between predicted and realized returns, and suggesting that the model captured temporal patterns in the forward return signal.

All intermediate outputs, including OOF predictions, performance metrics, and daily IC series, were saved for reproducibility and further analysis.

In conclusion, the results demonstrate that combining IC- and SHAP-based feature selection with XGBoost enables extraction of weak but detectable signals from high-dimensional, noisy financial data over a 21-day horizon.

While the predictive power remains limited, the approach highlights the utility of integrating linear correlation with model-driven importance to enhance feature selection in quantitative return forecasting.

XGBoost Modeling of 21-Day Forward Returns with IC- and SHAP-Based Feature Selection

4.6 XGBoost Modeling of 5-Day Forward Returns with IC- and SHAP-Based Feature Selection

We now set out to forecast 5-day forward returns using a large feature set, focusing on both accuracy and interpretability. After cleaning the data and aligning features with dates, we applied a two-stage feature selection: Spearman IC to capture directly correlated signals and XGBoost SHAP to identify features with the greatest model impact, retaining 102 predictors from their union. Hyperparameters were tuned via Optuna with GroupKFold, yielding an RMSE of 0.0474, R² of 0.0892, and a Spearman IC of 0.155. Daily IC analysis confirmed stable predictive power (mean 0.1545, std dev 0.0508). All outputs—including predictions, metrics, and IC plots—were saved, resulting in a concise and interpretable model that captured meaningful signals and demonstrated robustness over time.

XGBoost Modeling of 5-Day Forward Returns with IC- and SHAP-Based Feature Selection

4.7 XGBoost Modeling of 1-Day Forward Returns with IC- and SHAP-Based Feature Selection

In our study, we aimed to predict 1-day forward returns using a large universe of alpha signals. The focus was on both predictive performance and identifying which features meaningfully contributed to the forecasts.

All features and target returns were cleaned to remove infinities and clipped to avoid extreme values. Dates were carefully aligned to ensure temporal consistency.